The Document Accuracy Layer: the missing control point for regulated AI

A Document Accuracy Layer is the critical foundation for defensible AI, audit-ready compliance, and long-term records preservation. Whether you’re enabling RAG, automating document processing, or meeting retention requirements like preservation-grade PDF/A, a Document Accuracy Layer helps ensure your AI and compliance programs are built on trusted, verifiable documents, not unreliable inputs.

Enterprise AI has a document problem.

Not “we need more PDFs.” Not “we need better prompts.” The real problem is that regulated work still runs on documents (clinical packages, claims files, inspection reports, engineering drawings, controlled records) and those documents arrive in every format and quality level imaginable. Even when organizations have modern systems, the underlying inputs are often a mix of scanned images, messy PDFs, Office files with embedded objects, emails, CAD drawings, and decades-old archives.

When you point AI at that reality without an accuracy control point, you don’t just get messy outputs. You get risk: decisions that can’t be defended, audits that take weeks, exceptions that overwhelm operations, and “human review forever” as the only safety net.

This is the role of the Document Accuracy Layer.

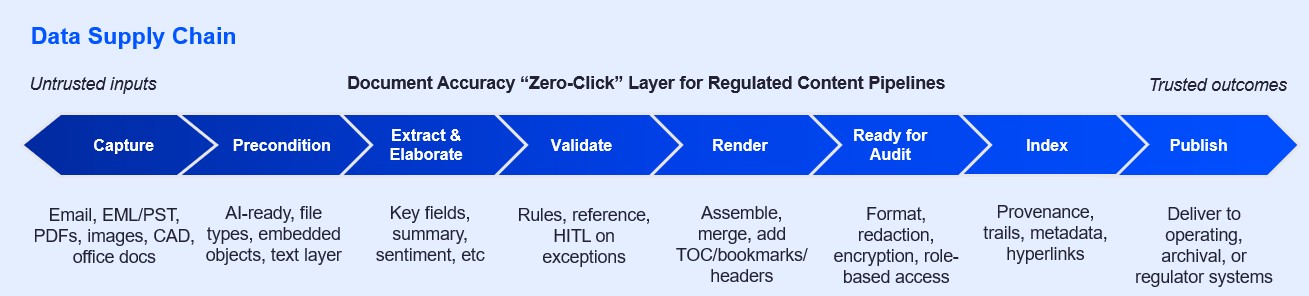

A Document Accuracy Layer is the upstream layer that turns document chaos into trusted inputs for downstream systems, like IDP, search, analytics, ECM/RIM, and AI (including RAG). It’s the part of the architecture that makes documents machine-navigable and outcomes verifiable, not just “converted.”

It’s the layer that ingests messy multi-format content and produces compliant, searchable outputs and high-quality structured data that downstream systems can trust.

Think of it as the “refinery” in your information supply chain:

This is not a nice-to-have “pre-processing step.” In regulated environments, it’s a control layer that protects three things executives care about: AI reliability, compliance defensibility, and long-term preservation.

RAG systems, copilots, and extraction pipelines are only as good as the content you feed them. If your source documents are missing pages, have broken tables, have no reliable text layer, or contain unrendered embedded objects, you get predictable failure modes:

The Document Accuracy Layer produces machine-navigable pipelines by doing things like fidelity-preserving rendering, advanced OCR, chunking with citation anchors, structured data contracts, and validation against business/compliance rules.

In practice, that means downstream AI has a fighting chance to be:

Regulated AI programs need more than model confidence scores. They need repeatable, reviewable evidence that outputs meet defined standards.

That’s why Adlib’s latest release emphasizes document accuracy and trust controls like:

Those are the kinds of controls that let a regulated team say: “Here’s what we processed, how we processed it, what the system believed, what rules were applied, and what required human validation.”

A common failure pattern in highly regulated organizations: teams try to “fix compliance” at the end of the workflow. They clean up files right before an audit, right before submission, or right before archiving.

That’s expensive, slow, and risky.

A Document Accuracy Layer changes the posture: it creates audit-ready records in the flow of work by combining capture, classification, validation, and rendering upstream, so teams don’t have to do “records cleanup” as a separate project later.

This is particularly relevant when:

In regulated work, “we converted it” isn’t enough. You need to validate that the document is complete, readable, correctly structured, and consistent with policy.

Checking things like:

That’s how compliance teams move from reactive inspection to proactive control.

Many regulated organizations have retention schedules measured in years, or decades. “We’ll just store the originals” isn’t a strategy when the original format may not be readable in 10–20 years, the authoring application is deprecated, or the content contains proprietary objects.

Enterprises in regulated industries are explicit about this pain:

This is the quiet risk in many AI programs: the same documents you want to use for AI today must also remain authentic, accessible, and renderable years from now. Preservation-grade outputs support both.

In regulated environments, fidelity matters because layout is meaning: signatures, tables, footers, diagrams, annotations, and embedded objects can change interpretation. Long-term preservation compliance is fundamentally about ensuring that what you archive is what you can later prove.

“Pixel-perfect” rendering and preservation-grade outputs such as PDF/PDF-A are a core part of compliance confidence.

When you combine that with provenance trails, retention metadata, and controlled publishing into ECM/RIM, you get something more valuable than “storage”: you get future-proof compliance evidence.

If you operate in life sciences, insurance, energy, public sector, or other regulated environments, the Document Accuracy Layer is a force multiplier because it supports three strategic outcomes at once:

And importantly: it lets you modernize without ripping out the systems you already have, because the layer integrates upstream/downstream via connectors and APIs into ECM/RIM, case systems, data lakes, and AI infrastructure.

Most organizations try to make AI trustworthy at the very end: prompt constraints, policy overlays, “human review,” and governance committees. Those matter, but they don’t solve the core issue if your documents are inconsistent, incomplete, and unvalidated.

A Document Accuracy Layer solves the root cause by treating documents as what they really are in regulated industries: evidence.

When your pipeline can produce fidelity-preserving, validated, machine-navigable, preservation-grade outputs with measurable trust controls, you get more than better automation. You get a foundation for AI that your compliance, legal, and operational teams can live with for the next decade.

Take the next step with Adlib to streamline workflows, reduce risk, and scale with confidence.